Why your data pipelines keep breaking (and what to do about it)

If you're a data professional, chances are you've spent more time fixing pipelines than building them. It's not your fault. The truth is, modern data infrastructure is a moving target. APIs change. Schemas drift. Your pipeline isn’t broken because you lack skills; it’s broken because everything around it keeps shifting.

The root of the problem

Most pipelines are fragile by design. A single column rename, a changed endpoint response, or an undocumented API update can cause silent failures. Dashboards go stale. Reports break. And worse, nobody notices until someone makes a decision based on bad data.

The real cost? Hours of engineering time. Delayed insights. Eroded trust in your data team.

A real-world example

Let’s say you’re syncing ad spend data from Meta Ads. Your pipeline expects a field called spend_amount. One day, Meta updates its API, and the field is now called spend_total. Your sync doesn’t fail visibly. It just stops pulling that field. The dashboard quietly shows zero spend. Your campaign budget decisions are now based on nothing.

It’s this kind of silent failure that causes the most damage. And it’s happening more often than most teams realize.
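One way to turn this silent failure into a loud one is to check each incoming record for the fields you depend on before loading it. The snippet below is a minimal sketch, not a definitive implementation: the field names come from the hypothetical example above, and `validate_record` and `MissingFieldError` are illustrative names, not part of any real API.

```python
# Fields the downstream dashboard depends on (hypothetical names from the example).
REQUIRED_FIELDS = {"campaign_id", "spend_amount"}


class MissingFieldError(ValueError):
    """Raised when an API record lacks a field the pipeline depends on."""


def validate_record(record: dict) -> dict:
    """Return the record unchanged, or raise if a required field is absent."""
    missing = REQUIRED_FIELDS - set(record)
    if missing:
        # Fail loudly and list what arrived, so a rename is easy to spot.
        raise MissingFieldError(
            f"missing required fields: {sorted(missing)}; got {sorted(record)}"
        )
    return record
```

With a check like this in place, a record that arrives with spend_total instead of spend_amount stops the sync with an error that names both fields, rather than loading a zero into the dashboard.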

Why maintenance is harder than building

It feels great to spin up a new pipeline. You’re solving a problem, delivering value, checking boxes. But maintenance? That’s where complexity creeps in. Suddenly you’re dealing with versioning issues, retries, schema mismatches, and error handling logic.
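To give a sense of what that maintenance logic looks like, here is a rough sketch of a retry with exponential backoff. It assumes the call signals a transient failure by raising `ConnectionError`; the `retry` helper and its parameters are made up for illustration, and a real pipeline would likely need to handle more error types.

```python
import time


def retry(fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds or attempts run out.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between tries.
    """
    for attempt in range(attempts):
        try:
            return fetch()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error instead of hiding it
            sleep(base_delay * 2 ** attempt)
```

Even this small helper carries decisions you now own: which errors count as temporary, how long to wait, and when to give up.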

Think of it like owning a car. Building the pipeline is buying it. Maintenance is the endless cycle of oil changes, tire rotations, and surprise repairs. It's less glamorous, but more critical.

DIY data pipelines vs. managed ETL tools

Many teams start with custom scripts or homegrown orchestration setups. They work until they don’t. As you scale, each pipeline adds to your cognitive load.

Some studies suggest that data engineers spend up to 44% of their time fixing broken pipelines. That’s time lost to maintenance. And it's not just time; it's opportunity cost.

Why ETL matters more than ever

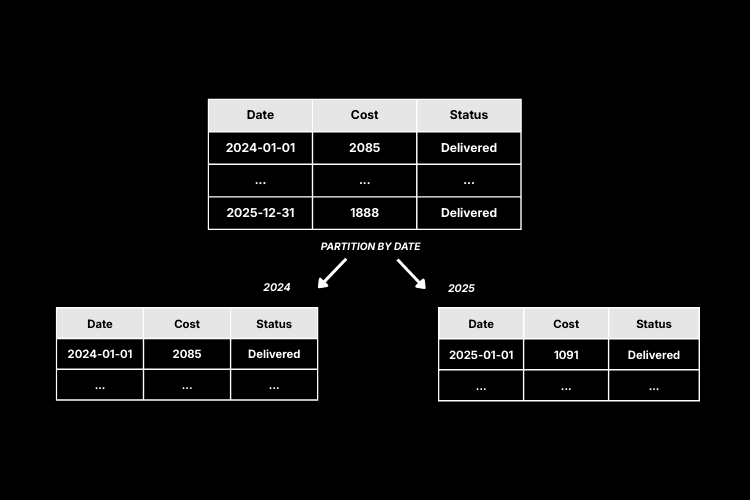

ETL (Extract, Transform, Load) is the backbone of any modern data stack. It brings together scattered, messy data from different sources and transforms it into something structured and usable in your data warehouse. But when APIs shift and schema changes catch you off guard, even the best-built ETL processes can break.

That’s where ETL automation comes in. Managed ETL platforms take over the heavy lifting, automatically adapting to changes in data sources, deduplicating entries, and optimizing performance. With features like schema drift detection, pre-aggregation, and no-code interfaces, they reduce operational risk and keep your pipelines running smoothly, without draining engineering time.
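At its simplest, schema drift detection means comparing the columns you expect with the columns a source actually returns. The sketch below illustrates the idea only; it is not how any particular managed platform implements it, and `diff_schema` plus the column names are hypothetical.

```python
def diff_schema(expected: dict, observed: dict) -> dict:
    """Compare two {column: type} mappings and report what drifted."""
    return {
        # Columns the pipeline expects but the source no longer sends.
        "removed": sorted(set(expected) - set(observed)),
        # Columns the source sends that the pipeline does not know about.
        "added": sorted(set(observed) - set(expected)),
        # Columns present in both whose declared type changed.
        "retyped": sorted(
            col
            for col in set(expected) & set(observed)
            if expected[col] != observed[col]
        ),
    }


expected = {"campaign_id": "string", "spend_amount": "float"}
observed = {"campaign_id": "string", "spend_total": "float"}
drift = diff_schema(expected, observed)
```

Here `drift` lists spend_amount under "removed" and spend_total under "added", which is the usual signature of a renamed column and a good reason to alert someone before the next sync runs.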

Whether you're working with marketing data, sales analytics, or finance reports, automating ETL helps you:

- Avoid pipeline failures from API changes

- Deliver clean, accurate data to your team

- Free up engineers to focus on high-impact projects

How to fix your data pipeline issues

Start by choosing ETL tools built for flexibility and change. Look for platforms that:

- Handle schema drift and API changes gracefully

- Provide transparency into sync status and errors

- Require minimal ongoing maintenance

If you're currently debugging custom SQL scripts or juggling multiple pipeline tools, it might be time to rethink your data integration approach.

How Weld handles fragile pipelines

At Weld, we’ve built our platform to reduce the burden of pipeline maintenance from day one. Here's how:

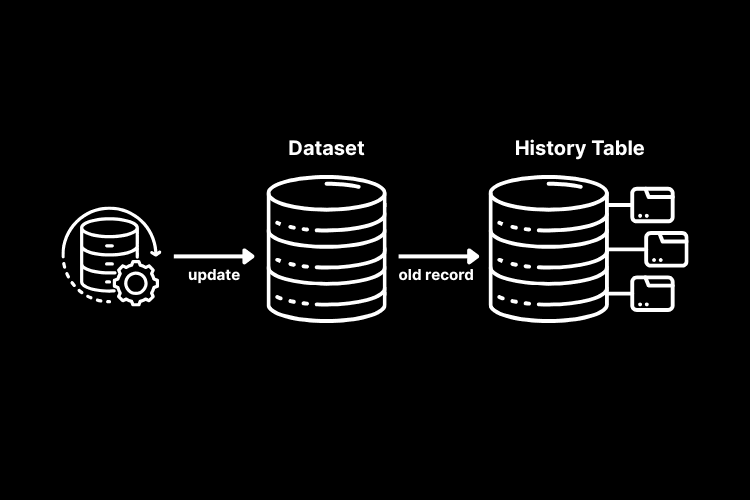

Automatic schema change handling: Weld detects and adjusts to schema changes in many popular data sources, so a renamed column doesn’t break your sync.

Sync observability: We make it easy to track sync health, view errors, and get notified when things go wrong.

Protected tables: Our “Protected Tables” feature gives you control over how changes are applied. You can review schema updates before they hit your warehouse, so your production models stay safe and reliable.

This combination of flexibility, visibility, and control helps teams avoid the most common failure points in modern data stacks.

Conclusion

You don’t need to settle for pipelines that constantly break. Ask yourself:

- How often do we troubleshoot data sync issues?

- What would it mean to reclaim up to 44% of our engineering time?

- Are our tools helping us scale, or holding us back?

Build fast. Maintain smarter. Your data deserves better!

Looking for the right ETL tool?

Check out our guide to the top ETL tools in 2025: a detailed comparison of data platforms to help you choose the one that fits your team, stack, and scale.