Introduction: Build vs Buy

Building a data pipeline requires extracting, transforming, and loading data into a central repository (usually a data warehouse). In today’s data-driven world, a robust ETL process is vital for data management and analytics.

However, maintaining data pipelines is time-consuming for several reasons. One primary challenge is handling data volume and scalability: as data grows, pipelines need to process and move ever-larger datasets efficiently, which can strain storage and computing resources.

Another major challenge is data quality and consistency across diverse sources. For example, schema drift (unexpected changes to a source's columns or data types) is a common issue that can break downstream transformations and cause pipeline failures.
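To make schema drift concrete, here is a minimal Python sketch that checks incoming records against an expected schema before they reach downstream transformations. The `EXPECTED_SCHEMA` columns and the `detect_drift` helper are hypothetical, chosen only for illustration.

```python
from typing import Any

# Hypothetical expected schema for an "orders" source: column name -> Python type.
EXPECTED_SCHEMA: dict[str, type] = {"order_id": int, "amount": float, "currency": str}


def detect_drift(record: dict[str, Any], expected: dict[str, type] = EXPECTED_SCHEMA) -> list[str]:
    """Return drift findings for one record (an empty list means no drift)."""
    findings = []
    for column, col_type in expected.items():
        if column not in record:
            findings.append(f"missing column: {column}")
        elif not isinstance(record[column], col_type):
            found = type(record[column]).__name__
            findings.append(f"type change in {column}: expected {col_type.__name__}, got {found}")
    # Columns the source started sending that the schema does not know about.
    for column in sorted(record.keys() - expected.keys()):
        findings.append(f"new column: {column}")
    return findings


# The source renamed "amount" to "total" and started sending order_id as a string.
print(detect_drift({"order_id": "A-17", "total": 9.5, "currency": "EUR"}))
# ['type change in order_id: expected int, got str', 'missing column: amount', 'new column: total']
```

Running a check like this at the start of a pipeline turns a silent downstream failure into an explicit, early error.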

Therefore, many business analysts and decision-makers face a key decision: should we build an in-house ETL/data pipeline architecture or purchase an off-the-shelf product?

What are you trying to build?

Before starting, it helps to review two fundamental concepts: APIs and data pipelines.

What is an API?

An Application Programming Interface (API) is a set of rules, definitions, and protocols that enable two software components to communicate with each other. APIs act as intermediaries, enabling one application to request data or functionality from another. Even though APIs facilitate access to data, they don’t necessarily manage the data’s journey from source to destination. This is why we need to talk about data pipelines.
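As an illustration, the Python sketch below requests every page of records from a cursor-paginated API. The URL, the `{"data": [...], "next_cursor": ...}` response shape, and the `extract_records` helper are hypothetical; real APIs differ in authentication, pagination style, and rate limits. The fetch function is injectable so the example can run offline with fake pages.

```python
import json
from typing import Callable, Iterator, Optional
from urllib.request import urlopen


def http_get_json(url: str) -> dict:
    """Perform a real HTTP GET and decode the JSON body."""
    with urlopen(url) as response:
        return json.load(response)


def extract_records(base_url: str, get_json: Callable[[str], dict] = http_get_json) -> Iterator[dict]:
    """Yield every record from a hypothetical cursor-paginated endpoint."""
    url: Optional[str] = base_url
    while url is not None:
        page = get_json(url)
        yield from page["data"]
        cursor = page.get("next_cursor")
        url = f"{base_url}?cursor={cursor}" if cursor else None


# Offline usage: a dict of fake pages stands in for the real API.
fake_pages = {
    "https://api.example.com/orders": {"data": [{"id": 1}, {"id": 2}], "next_cursor": "abc"},
    "https://api.example.com/orders?cursor=abc": {"data": [{"id": 3}], "next_cursor": None},
}
print(list(extract_records("https://api.example.com/orders", fake_pages.__getitem__)))
# [{'id': 1}, {'id': 2}, {'id': 3}]
```

Notice that the API only answers requests: deciding when to run this loop, where to store the results, and what to do when a call fails is left to the caller, which is the journey a data pipeline manages.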

What is a data pipeline?

A data pipeline is a set of tasks and processes that move, transform, or serve data. Organizations often have large volumes of data from multiple sources, such as applications and other digital channels, that need to be transferred to another system automatically. You can think of a data pipeline as a factory assembly line: raw data enters at the start and passes through a series of steps until it comes out as clean, usable, analysis-ready data!
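The assembly-line analogy maps naturally onto code: each station is a function, and the pipeline passes the output of one station into the next. This is a minimal Python sketch; the three steps and the sample rows are hypothetical.

```python
from functools import reduce
from typing import Callable

Rows = list[dict]
Step = Callable[[Rows], Rows]


def drop_incomplete(rows: Rows) -> Rows:
    """Station 1: discard rows without an email address."""
    return [row for row in rows if row.get("email")]


def normalize_email(rows: Rows) -> Rows:
    """Station 2: trim whitespace and lowercase emails."""
    return [{**row, "email": row["email"].strip().lower()} for row in rows]


def deduplicate(rows: Rows) -> Rows:
    """Station 3: keep the first row seen for each email."""
    seen: set[str] = set()
    unique = []
    for row in rows:
        if row["email"] not in seen:
            seen.add(row["email"])
            unique.append(row)
    return unique


def run_pipeline(rows: Rows, steps: list[Step]) -> Rows:
    """Feed the output of each step into the next, like an assembly line."""
    return reduce(lambda acc, step: step(acc), steps, rows)


raw = [
    {"name": "Ada", "email": " ADA@example.com "},
    {"name": "Ada L.", "email": "ada@example.com"},
    {"name": "Bob", "email": None},
]
print(run_pipeline(raw, [drop_incomplete, normalize_email, deduplicate]))
# [{'name': 'Ada', 'email': 'ada@example.com'}]
```

Keeping each step small and independent makes it easy to test, reorder, or add stations later.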

What is the difference between data pipelines and ETL pipelines?

Data pipelines move data from a source to a destination for analysis or visualization. As mentioned earlier, they gather raw data, transform it, and store it in a data warehouse or data lake. Data pipelines can also be designed to handle streaming data and process it in real time.
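To illustrate the streaming case, the Python sketch below processes each event as it arrives and emits an updated result immediately, instead of waiting for a complete batch. The event fields are hypothetical, and a real streaming pipeline would read from a message broker or event stream rather than a Python list.

```python
from typing import Iterable, Iterator


def running_revenue(events: Iterable[dict]) -> Iterator[tuple[str, float]]:
    """Update a revenue total per event and yield it right away (record at a time)."""
    total = 0.0
    for event in events:
        total += event["amount"]
        yield event["order_id"], total


# In production `events` could be an unbounded stream; a list stands in here.
stream = [{"order_id": "o1", "amount": 20.0}, {"order_id": "o2", "amount": 5.5}]
for order_id, total in running_revenue(stream):
    print(order_id, total)
# o1 20.0
# o2 25.5
```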

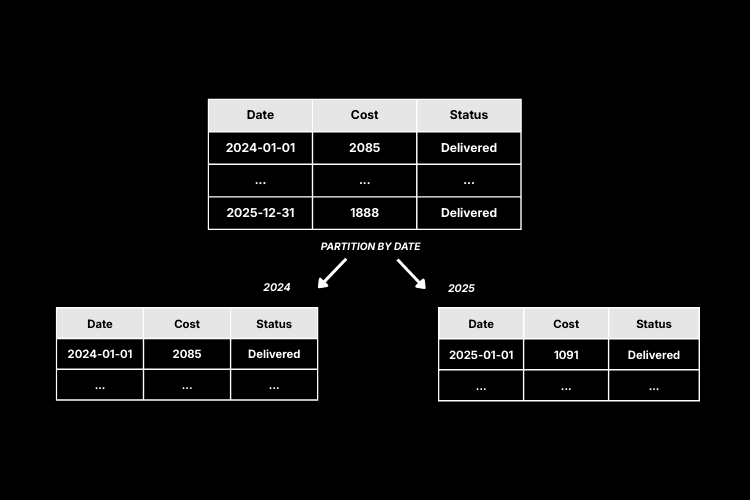

An extract, transform, and load (ETL) pipeline is a specific type of data integration pipeline. ETL tools pull raw data from multiple applications and sources (e.g. Shopify or Facebook Ads) and store it in a temporary location called the staging layer. The data is transformed in this staging area and then loaded into a data warehouse. ETL pipelines generally operate on batch data and run at scheduled intervals.
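The extract, stage, transform, and load sequence can be sketched with Python's built-in `sqlite3` module, using one in-memory database as a stand-in for both the staging layer and the warehouse. Table names, columns, and sample rows are hypothetical; a production setup would use a real warehouse and run this as a scheduled batch job.

```python
import sqlite3

# In-memory SQLite stands in for both the staging layer and the warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE staging_orders (order_id TEXT, amount_cents TEXT, source TEXT);
    CREATE TABLE fact_orders (order_id TEXT PRIMARY KEY, amount REAL, source TEXT);
    """
)

# Extract: raw, untyped rows as they might arrive from two hypothetical sources.
raw_rows = [
    ("1001", "2599", "shopify"),
    ("1002", "1000", "shopify"),
    ("1002", "1000", "shopify"),  # the same order delivered twice
    ("ad-7", "", "facebook_ads"),  # missing amount
]
conn.executemany("INSERT INTO staging_orders VALUES (?, ?, ?)", raw_rows)

# Transform + load: deduplicate, drop incomplete rows, convert cents to currency units.
conn.execute(
    """
    INSERT INTO fact_orders (order_id, amount, source)
    SELECT DISTINCT order_id, CAST(amount_cents AS INTEGER) / 100.0, source
    FROM staging_orders
    WHERE amount_cents <> ''
    """
)
conn.execute("DELETE FROM staging_orders")  # the staging layer is temporary
conn.commit()

print(conn.execute("SELECT * FROM fact_orders ORDER BY order_id").fetchall())
# [('1001', 25.99, 'shopify'), ('1002', 10.0, 'shopify')]
```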

☝🏼 Key considerations before making your decision

Before choosing the most suitable option, it’s important to consider various factors. Defining your business’s needs, capabilities and constraints is crucial for making this decision.

Start by assessing these points:

- Define clear goals and objectives:

  - What does your business want to achieve with the data?

  - What are the main questions your business or stakeholders need answered?

  - Are you aiming to gain insights, make predictions, generate reports, or support decision-making?

- Assess the type and volume of your data: Are you working with large datasets or sensitive information, or are you subject to regulatory requirements?

- Think about the technical capacity and skills of your team:

  - Is the team better suited to a traditional or a modern solution?

  - Is there a dedicated in-house data team for building pipelines, writing code, and managing workflows? If not, ETL tasks fall as extra duties on other roles such as data analysts, software engineers, or data scientists.

  - Can your team handle maintenance tasks like testing, documentation, and optimization beyond the initial setup?

- Time and resources: Does your team have time to build and maintain a pipeline in-house? Or would outsourcing be more efficient?

- Business impact: Will your decision affect your product development or daily operations?

- Speed of execution: How fast do you need access to actionable data insights?

The Core Choices: Build vs Buy vs “Please Make It Go Away”

The decision involves much more than comparing the price of an ETL tool with the cost of an in-house pipeline. Here is why:

🔨 Option 1: Bespoke custom-made solution

Developing a custom pipeline means building it in-house with tools such as Airflow, dbt, or custom scripts.
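Orchestrators like Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and run each task once its upstream tasks are done. The sketch below shows only that core idea, using Python's standard-library `graphlib` (Python 3.9+). It is not Airflow's API, the task names are hypothetical, and it leaves out scheduling, retries, and monitoring, which are a large part of what you take on when building in-house.

```python
from graphlib import TopologicalSorter
from typing import Callable

log: list[str] = []


def make_task(name: str) -> Callable[[], None]:
    """Hypothetical task: a real one would call an API, run SQL, or invoke dbt."""
    def task() -> None:
        log.append(name)
    return task


# Each task name maps to the set of upstream tasks it depends on.
DEPENDENCIES: dict[str, set[str]] = {
    "extract_shopify": set(),
    "extract_ads": set(),
    "transform_orders": {"extract_shopify", "extract_ads"},
    "load_warehouse": {"transform_orders"},
}
TASKS = {name: make_task(name) for name in DEPENDENCIES}


def run_dag(dependencies: dict[str, set[str]], tasks: dict[str, Callable[[], None]]) -> None:
    """Run each task only after all of its upstream tasks have run."""
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()


run_dag(DEPENDENCIES, TASKS)
print(log)  # both extracts first (in either order), then transform, then load
```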

When a DIY solution may make sense

- Budgetary concerns

- Your team is technically proficient and experienced in handling issues

- Your current needs for a data pipeline do not require everything an ETL tool offers, or do require something specific that is not available through a commercial ETL tool.

- Performance and specific transformations are critical

Advantages of building

- Flexibility and performance: If your data integration is highly unique, hand-coding can be a better and cheaper option.

- Control and visibility: Full control and visibility over your data, greater customizability, and the freedom to choose the infrastructure for scaling.

- Cost-effectiveness: Cheaper than no-code ETL in most cases, as the business can outsource pipeline creation to an agency instead of hiring or training its own staff.

- High data accuracy: Code-based ETL can deliver higher data accuracy.

Disadvantages of building

- Development process: Writing code from scratch is time-consuming, and because an ETL pipeline is an integral part of business automation that must be highly reliable, there is no room for error.

- Scalability: As data volume grows, the business must optimize the pipeline for speed and memory usage.

- Performance: Supporting both batch and stream processing can add architectural complexity.

- Hard for non-technical team members to operate: A hand-coded ETL pipeline is likely to be optimized and managed by an in-house expert, which makes it complicated for non-technical colleagues to navigate and understand.

- Code maintenance: Depending on the nature of your business, some issues need quick and smooth resolution, which can be challenging with hand-written code.
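The scalability point above often comes down to not loading everything into memory at once. A common technique is to stream rows through the pipeline in fixed-size batches, as in this minimal Python sketch; `load_in_batches` is a hypothetical loader, and the actual warehouse write is left as a comment.

```python
from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield lists of at most `size` items without materializing the full input."""
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


def load_in_batches(rows: Iterable[dict], size: int = 1000) -> int:
    """Hypothetical loader: handle one batch at a time so memory stays bounded."""
    loaded = 0
    for batch in batched(rows, size):
        # A real pipeline would bulk-insert `batch` into the warehouse here.
        loaded += len(batch)
    return loaded


# A generator produces rows lazily, so the 1,000,000 rows never sit in memory at once.
rows = ({"id": i} for i in range(1_000_000))
print(load_in_batches(rows, size=50_000))  # 1000000
```

Python 3.12 also ships `itertools.batched`, which yields tuples; the hand-rolled version above works on older versions too.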

💰 Option 2: ETL by tool

A no-code or low-code ETL tool requires less technical expertise than an in-house pipeline, is simple to use, and is generally easy to navigate. When buying a third-party ETL tool, a business typically purchases or subscribes to a software product. You can find the best ETL tools on the market on our blog: Best ETL tools in 2025.

When buying makes sense:

- If your organization is looking for ease of use and rapid development, with features like built-in dashboards and failure alerts that keep business teams up to date, buying a tool is generally the better option.

- Buying is also a good choice in environments where non-technical users or cross-functional teams need to be involved, because business teams can start using the data for decision-making without waiting for engineers to build everything from scratch.
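For comparison, failure alerts are something a hand-built pipeline has to implement itself. The Python sketch below shows roughly the minimum: retry a failing step a few times, then notify someone. The `notify` callback and the `sync_stripe` step are hypothetical; in practice `notify` might post to a chat channel or send an email.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_alert(
    step: Callable[[], T],
    notify: Callable[[str], None],
    attempts: int = 3,
    delay_seconds: float = 1.0,
) -> T:
    """Run `step`, retrying on failure, and call `notify` if every attempt fails."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except Exception as exc:  # any failure counts; a real pipeline may be pickier
            if attempt == attempts:
                name = getattr(step, "__name__", "step")
                notify(f"{name} failed after {attempts} attempts: {exc}")
                raise
            time.sleep(delay_seconds)  # back off before the next attempt
    raise RuntimeError("unreachable")


alerts: list[str] = []


def sync_stripe() -> None:  # hypothetical step that always fails
    raise ConnectionError("timeout")


try:
    run_with_alert(sync_stripe, alerts.append, attempts=2, delay_seconds=0)
except ConnectionError:
    pass
print(alerts)  # ['sync_stripe failed after 2 attempts: timeout']
```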

Advantages of buying:

- Easy operation: Quick implementation, fast setup, and lower maintenance effort than manual ETL

- High computing power for large pipelines: If your business deals with large datasets and needs fast deployment, a no-code ETL tool is unlikely to cause speed or lag issues.

- Easy UI: An ETL tool is easy not only to set up but also to manage day to day. All data sources and workflows are transparent, so non-technical business partners can also understand them.

- Workflow management is easier: The tool handles data complexities for your team, freeing them to focus on higher-value tasks.

- Support and community: Established ETL tools often come with documentation and a support engineering team for troubleshooting.

Disadvantages of buying

- Limitations: Less customizable than hand-coded ETL pipelines, for example in terms of connectors

- Scalability challenge: Can become expensive at scale or when a business needs specialized consulting

Other options: ‘Go away abstract Mindset’ and ‘The Hybrid option’

Businesses can also rely on other approaches. One strategic approach is the ‘Go away abstract mindset’, in which decision-makers move away from high-level, theoretical thinking toward practical, concrete decision-making. Whenever the business assesses its data management, discussions are based on real business needs, such as specific use cases or actual technical constraints.

In this case, decision-makers would ask questions such as:

- Specific business goals: e.g. what decision needs to be made?

- Requirements: e.g. which data sources are needed, and at what granularity?

A business can also take a hybrid approach. This can be effective when tools handle standard tasks and a bespoke solution handles more specialized processes.

Build vs Buy comparison table

| Criteria | Build | Buy (ETL tool) |
|---|---|---|
| Setup time | Long | Medium |
| Flexibility | High | Medium |
| Cost (short-term) | Medium | High |
| Cost (long-term) | High | Depends |
| Maintenance burden | High | Low |
| Ideal for... | Data-driven organizations with unique needs | Scaling organizations with common needs |

It’s good to know, however…

There’s no one-size-fits-all solution. You can mix approaches: building your core pipelines in-house while buying connectors or tools for other tasks. The best choice always depends on your business’s context, capabilities, and long-term strategy.

Curious how Weld fits in?

Weld offers a low-code ETL pipeline with a visual interface and drag-and-drop functionality.

Weld helps you:

- Extract and sync data from tools like HubSpot, Shopify, Stripe, and Google Ads

- Use AI to create custom metrics from your raw data

- Push modeled, clean data into any warehouse or lake destination

In addition:

- Managed services: Weld assists customers with pipeline setup and ongoing maintenance.

- Quick online support: Weld offers an online support chat covering everything from rapid troubleshooting to best-practice advice. The chat also integrates FAQs with built-in AI for faster troubleshooting.

- Migration support: Weld offers free migration from Fivetran, making it easier than ever to switch and scale.

When your data is reliable, connected, and well documented, your analytics and AI initiatives can thrive. 🤝

"I don’t have a technical background, but Ed has really helped me learn SQL. It’s like a calculator – I use it for brainstorming data models, troubleshooting queries, and getting quick suggestions. It’s made working with data a lot easier."

— Jacob Poulsen, Head of Marketing Expansion, Flatpay

Sources: