In everyday data analytics, most users don’t think about API row limits until the day they unexpectedly hit a wall. What happens when you reach the daily cap of 50,000 rows in the Google Search Console API? Where is the rest of your data? Why does this happen, and how can you avoid it in the future? In this article, we’ll break down what this limit is, how to recognize it, and what it means in practice for your reporting workflows.

What Is the 50,000 Row Limit?

The Google Search Console API does not return more than 50,000 rows per day per search type (web, image, video, etc.). This is a hard technical limit: if your site generates a very large number of unique query/page/device/country combinations for a day, only the top 50,000 (ranked by clicks) are exposed.

The limit is easy to overlook, because for smaller sites and many other users, daily row counts stay well under the maximum. But if your site gets a spike in search activity, or you run very granular queries across several dimensions, the API can cut off the excess data.

The impact of this limitation can be significant, especially for larger websites or those with diverse content. Missing data can lead to incomplete analyses and potentially misguided business decisions.

Real-World Example: Visualizing the Row Limit

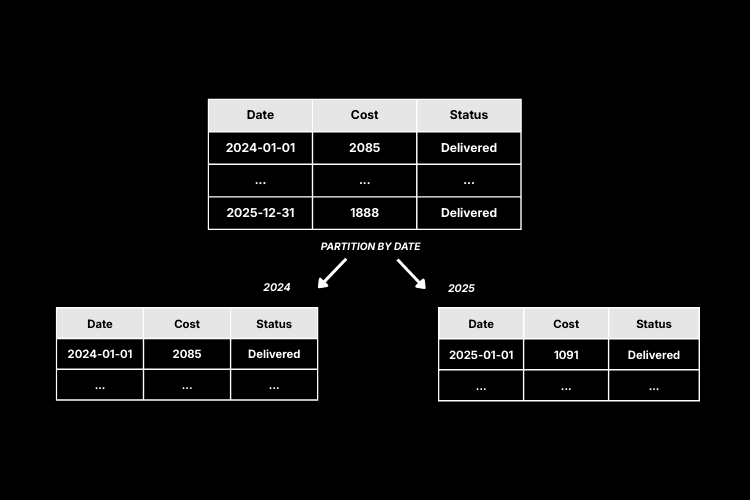

Let's say you run a couple of simple SQL queries to explore your Google Search Console data. You might see something like this:

- Unfiltered Data: Well Above the Limit

Above, we see a generic query against the raw analytics table. The total number of rows in the dataset is much larger than the 50,000-row limit of Google's API. No row cap is visible yet, because the table spans many days; we'll look into why this happens a bit later.

- Single-Day Data: The Cap in Action

When we filter for a single day with high activity (in this example, June 1, 2025), the query returns exactly 50,000 total rows. This aligns perfectly with the official documentation: the API will not deliver more than this for any single day, regardless of underlying data volume.
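
The same check can be made while pulling a day's data from the API. The sketch below is a minimal illustration, not a definitive implementation: `fetch_page(start_row, row_limit)` is a hypothetical callable (an assumption, not part of any Google client library) that wraps one Search Analytics query for a single day and returns that page's rows as a list. It pages through results the way the API's `startRow` and `rowLimit` request fields allow, with 25,000 as the largest page size, and reports whether the total reached the cap.

```python
from typing import Callable, List

DAILY_ROW_CAP = 50_000   # daily cap described in this article
MAX_PAGE_SIZE = 25_000   # largest rowLimit value accepted per request


def fetch_all_rows(fetch_page: Callable[[int, int], List[dict]],
                   page_size: int = MAX_PAGE_SIZE) -> List[dict]:
    """Page through one day's results until a short (or empty) page arrives.

    `fetch_page(start_row, row_limit)` is a hypothetical wrapper around a
    single Search Analytics query; it is an assumption of this sketch.
    """
    rows: List[dict] = []
    start_row = 0
    while True:
        page = fetch_page(start_row, page_size)
        rows.extend(page)
        if len(page) < page_size:  # a short page means there is no more data
            return rows
        start_row += page_size


def looks_truncated(rows: List[dict], cap: int = DAILY_ROW_CAP) -> bool:
    """True when the day returned at least `cap` rows, so data may be cut off."""
    return len(rows) >= cap
```

A day that returns fewer than 50,000 rows is complete. A day that returns exactly 50,000 is a candidate for truncation and worth a closer look.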

Why Does This Happen?

There are two key reasons why you might hit this limit:

- Data granularity: The more dimensions you include (page, query, device, country), the more rows you generate per day. You might have millions of unique combinations, but the API will only return the top 50,000 by clicks.

- API constraints: Google Search Console cuts off at 50,000 rows per day, per search type, even if your underlying data far exceeds it. A single day with high activity can easily max out this limit, and queries that generate many unique combinations can hit the cap even on days with lower traffic.
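
To see how quickly granularity inflates row counts, here is a small sketch with made-up values. The page, query, device, and country lists are synthetic and purely illustrative. The function returns an upper bound, since not every combination actually occurs in real search data.

```python
from math import prod

# Synthetic dimension values, purely illustrative.
pages = [f"/page-{i}" for i in range(20)]
queries = [f"query {i}" for i in range(50)]
devices = ["DESKTOP", "MOBILE", "TABLET"]
countries = ["usa", "gbr", "deu", "fra"]


def max_rows(*dimensions):
    """Upper bound on distinct rows per day when grouping by these dimensions."""
    return prod(len(values) for values in dimensions)


print(max_rows(pages))                               # 20
print(max_rows(pages, queries))                      # 1000
print(max_rows(pages, queries, devices))             # 3000
print(max_rows(pages, queries, devices, countries))  # 12000
```

Even this tiny example grows 600-fold from one dimension to four. At the scale of a real site, the same multiplication can push a single day past 50,000 rows.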

What Can You Do?

Despite this hard limit on the API, there are several strategies you can use to work around it:

- Monitor daily row counts: Track when your daily queries return close to the 50,000 row limit. This is a signal that the API may be truncating data for that day, and you may want to adjust your query granularity or date ranges. You can, for example, add a column in your SQL queries that flags whether a specific day is close to or reaching this limit to easily identify such cases.

- Optimize dimensions: If you’re running into the limit, consider narrowing the query by using fewer dimensions or adding filters on key metrics.

- Aggregate over date ranges: The cap applies to each day separately, so pulling data across weeks or months gives you access to far more than 50,000 rows in total. For example, for a 3-month period (roughly 90 days × 50,000 rows), you could potentially access up to 4.5 million rows of data per search type. This is also what we see in the first example above, where the unfiltered query returns a much larger number of rows than the daily limit.

- Document and annotate: If you are sharing results, make it clear that 50,000 rows per day is the maximum. This helps stakeholders understand the context of your data and why some queries may not return all expected results.
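
The monitoring and date-range points above can be sketched in a few lines. This is a minimal example under stated assumptions: the input is a mapping of day to row count that you have already computed (for instance with a `COUNT(*)` grouped by date), the 95% warning threshold is an arbitrary illustrative choice, and the function names are hypothetical.

```python
from typing import Dict

DAILY_ROW_CAP = 50_000  # daily cap described in this article
WARN_RATIO = 0.95       # illustrative threshold for "close to the limit"


def flag_days(daily_counts: Dict[str, int],
              cap: int = DAILY_ROW_CAP,
              warn_ratio: float = WARN_RATIO) -> Dict[str, str]:
    """Label each day as 'at_limit', 'near_limit', or 'ok' by its row count."""
    flags = {}
    for day, count in sorted(daily_counts.items()):
        if count >= cap:
            flags[day] = "at_limit"    # data for this day is likely truncated
        elif count >= warn_ratio * cap:
            flags[day] = "near_limit"  # worth watching
        else:
            flags[day] = "ok"
    return flags


def max_rows_for_range(days: int, cap: int = DAILY_ROW_CAP) -> int:
    """Upper bound on rows for a date range, since the cap applies per day."""
    return days * cap


counts = {"2025-06-01": 50_000, "2025-06-02": 48_000, "2025-06-03": 12_000}
print(flag_days(counts))
print(max_rows_for_range(90))  # 4500000
```

Days flagged `at_limit` are the ones to annotate in shared reports, since their totals may understate the real data.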

Final Thoughts

Hitting the row limit is a good sign: it means your site (or site portfolio) is producing enough granular search data to max out the Search Analytics method of Google’s API for that day. Keep an eye on your daily row counts, optimize your queries, and be aware of this technical nuance as you build reports or dashboards for yourself and stakeholders.